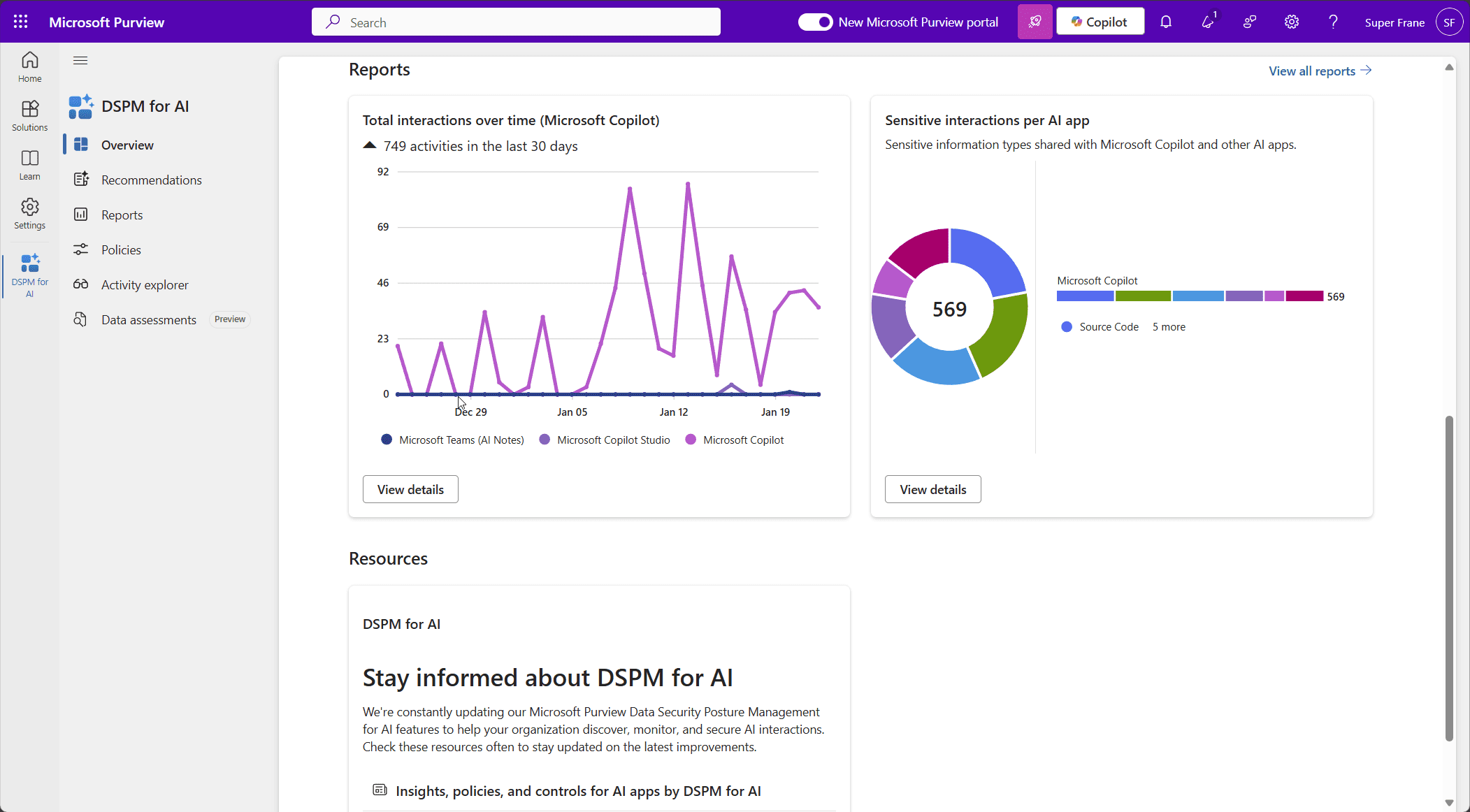

Microsoft Data Security Posture Management for AI

As artificial intelligence (AI) becomes increasingly integrated into organizational processes, organizations need Data Security Posture Management (DSPM) tailored specifically to AI environments. AI systems rely heavily on large datasets for training and decision-making, and these datasets often contain sensitive or proprietary information. Without proper security measures, the risk of data exposure, bias, and misuse can escalate significantly.

Key Challenges in Securing AI Data

- Sensitive Data Exposure: AI models are often trained on data that includes personally identifiable information (PII), trade secrets, or confidential business data. Without adequate security measures, this data could be exposed during training or storage.

- Data Integrity Risks: Manipulated or corrupted training data can lead to biased or unreliable AI outputs. Ensuring the integrity of data used in AI is a critical aspect of data security posture.

- Complex Data Flows: AI projects typically involve multiple stakeholders, environments, and tools, creating complex data flows that are difficult to monitor and secure.

- Regulatory Compliance: AI applications must comply with data protection laws such as GDPR, HIPAA, and other regional regulations. Ensuring compliance across AI data pipelines can be a daunting task.
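The data integrity risk above can be made concrete with a small example. The following is a minimal sketch, not part of any Microsoft product: it assumes training data lives as files in a local directory and records a SHA-256 digest for each file, so that later changes can be detected. The function names (`file_digest`, `build_manifest`, `changed_files`) are hypothetical and chosen only for illustration.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Map each file's path (relative to data_dir) to its digest."""
    return {
        str(p.relative_to(data_dir)): file_digest(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def changed_files(data_dir: Path, manifest: dict[str, str]) -> list[str]:
    """List files modified, added, or removed since the manifest was built."""
    current = build_manifest(data_dir)
    return sorted(
        name
        for name in manifest.keys() | current.keys()
        if manifest.get(name) != current.get(name)
    )
```

In this sketch, a team would call `build_manifest` once on a reviewed dataset, store the result somewhere the training pipeline cannot overwrite, and call `changed_files` before each training run. Note that this only detects changes made after the baseline was recorded; it cannot tell whether the baseline data was already manipulated.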

How DSPM Supports AI Security

Microsoft’s DSPM provides an essential framework for managing data security in AI environments. Key features include:

- AI-Specific Data Discovery: DSPM tools like Microsoft Purview can automatically identify datasets used in AI training and classify their sensitivity levels. This ensures organizations understand the nature of the data being fed into AI systems.

- Real-Time Monitoring and Threat Detection: AI models are susceptible to attacks like data poisoning or model inversion. DSPM’s threat detection capabilities, integrated with Microsoft Defender, monitor AI workflows for suspicious activity and unauthorized data access.

- Access Control and Governance: By applying granular access controls, DSPM ensures that only authorized users can access AI datasets. It also enforces governance policies to prevent misuse of sensitive data.

- Bias and Integrity Checks: DSPM frameworks can include tools to detect anomalies or biases in datasets, ensuring that AI models are trained on accurate and fair data.

- Compliance Automation: DSPM simplifies compliance by mapping AI data security measures to relevant regulations. Continuous monitoring ensures that AI systems adhere to legal and ethical standards.

- End-to-End Visibility: DSPM provides a unified dashboard that tracks the security posture of data across AI projects. This includes training datasets, model storage, and API interactions.
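To illustrate the idea behind data discovery and sensitivity classification, here is a minimal sketch in Python. It does not call Microsoft Purview or reproduce how Purview classifies data; it only shows the general pattern of scanning text records for known sensitive formats and assigning a label. The two regular expressions, the labels `"sensitive"` and `"general"`, and the function name `classify_records` are all assumptions made for this example, and real classifiers use far richer detection logic.

```python
import re

# Illustrative patterns only; production classifiers combine many more
# signals (checksums, keywords, proximity, confidence levels).
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def classify_records(records):
    """Count pattern matches across text records and derive a coarse label.

    Returns a (label, counts) tuple, where label is "sensitive" if any
    pattern matched at least once and "general" otherwise.
    """
    counts = {name: 0 for name in PATTERNS}
    for text in records:
        for name, pattern in PATTERNS.items():
            counts[name] += len(pattern.findall(text))
    label = "sensitive" if any(counts.values()) else "general"
    return label, counts
```

A dataset owner could run a check like this on a sample of records before a dataset is approved for training, and route anything labeled `"sensitive"` to a review or redaction step.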